After a reboot of one of our Citrix servers in a Presentation Server 4 (PS4) farm, the OS booted up fine, but the IMA service did not start, and it would not start manually either.

We received the errors below in the system log:

Event ID 3389

The Citrix Presentation Server failed to connect to the Data Store. ODBC error while connecting to the database: S1000 -> [Microsoft][ODBC Microsoft Access Driver] The Microsoft Jet database engine stopped the process because you and another user are attempting to change the same data at the same time.

Event ID 3609

Failed to load plugin ImaPsSs.dll with error IMA_RESULT_FAILURE

Event ID 3609

Failed to load plugin ImaRuntimeSS.dll with error IMA_RESULT_FAILURE

Event ID 3601

Failed to load initial plugins with error IMA_RESULT_FAILURE

Event ID 7024

The Independent Management Architecture service terminated with service-specific error 2147483649 (0x80000001).
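The 7024 event reports the same code twice, once in decimal and once in hex. If you need to search for it elsewhere, a quick sketch like the one below converts between the two forms. It also shows the signed 32-bit reading, in case you come across the code printed as a negative number. The function name is my own illustration, not part of any Citrix tool.

```python
def describe_error(code: int) -> dict:
    """Return the hex form and the signed 32-bit reading of a service error code."""
    if not 0 <= code <= 0xFFFFFFFF:
        raise ValueError("expected an unsigned 32-bit value")
    # When the high bit is set, the same bits read as a negative signed int32.
    signed = code - 0x1_0000_0000 if code & 0x8000_0000 else code
    return {"decimal": code, "hex": f"0x{code:08X}", "signed": signed}


if __name__ == "__main__":
    print(describe_error(2147483649))
    # {'decimal': 2147483649, 'hex': '0x80000001', 'signed': -2147483647}
```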

After researching the error, I found that it was caused by corruption in the Local Host Cache (LHC) database. The LHC database holds a local copy of data store information, which allows a farm server to keep functioning if the data store is unreachable. It also improves performance by caching the information the server needs to answer ICA client requests, such as application enumeration. More information is available here:

http://support.citrix.com/article/CTX759510

The database itself is stored in the location below:

%ProgramFiles%\Citrix\Independent Management Architecture\imalhc.mdb

To fix this issue and get the IMA service started, first make sure the data store server is up and reachable, then recreate the database with the command below:

dsmaint recreatelhc

This renames the existing .mdb file, creates a new database and sets the registry value below, which forces the server to communicate with the data store when the IMA service is restarted so that the LHC database is repopulated:

HKEY_LOCAL_MACHINE\SOFTWARE\Citrix\IMA\Runtime\PSRequired

value = 1

The IMA service should then start up cleanly.
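If you have to do this on several servers, the steps above could be scripted along these lines. This is only a sketch under assumptions. The data store host and port are hypothetical placeholders, and the 1433 port is just the SQL Server default. I am also assuming that the IMA service's short name is "IMAService", so check it in services.msc first. By default it is a dry run that only returns the commands.

```python
import socket
import subprocess

# Hypothetical placeholders: replace with your own data store server and port.
DATASTORE_HOST = "datastore.example.local"
DATASTORE_PORT = 1433  # assumption: SQL Server default port

# Commands from the steps above; the "IMAService" name is an assumption.
RECREATE_LHC = ["dsmaint", "recreatelhc"]
START_IMA = ["net", "start", "IMAService"]


def datastore_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the data store can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def rebuild_lhc(host: str, port: int, dry_run: bool = True) -> list:
    """Check the data store, recreate the LHC, then start the IMA service.

    With dry_run=True nothing is checked or executed; the commands are returned.
    """
    if not dry_run and not datastore_reachable(host, port):
        raise RuntimeError(f"data store {host}:{port} is not reachable")
    steps = [RECREATE_LHC, START_IMA]
    if not dry_run:
        for cmd in steps:
            # check=True stops the sequence if dsmaint reports an error.
            subprocess.run(cmd, check=True)
    return steps


if __name__ == "__main__":
    for cmd in rebuild_lhc(DATASTORE_HOST, DATASTORE_PORT):
        print(" ".join(cmd))
```

The reachability check comes first because recreating the LHC while the data store is down would leave the server with nothing to repopulate from.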

Wednesday, 29 February 2012

Tuesday, 28 February 2012

Certificate errors on terminal servers/Missing certificates

This was a particularly annoying problem we were having in a Server 2008 R2/XenApp 6.5 environment, but I don't think it is limited to Server 2008 R2 or Citrix.

Users were frequently getting untrusted certificate errors on some fairly mainstream websites, yet the same sites worked fine when tested from a desktop PC or a server outside the Citrix environment.

When we opened the Trusted Root Certification Authorities store, either via Internet Explorer or via the MMC (local computer certificates), there were fewer trusted root certificates present than on a desktop PC or another server outside the Citrix farm. The Citrix servers were fully patched using Windows Update.

After investigating how trusted root certificate authorities work in Windows, I discovered that normally, when an HTTPS site loads whose certificate chains to a root that is not in the Trusted Root store, Windows checks Windows Update to see whether that root certificate is on Microsoft's trusted root certificate list. If it is, Windows imports it automatically, directly rather than through a WSUS/Windows Update installation.

I then looked up the Group Policy setting that enables/disables automatic root certificate updates, and found it in:

Computer Configuration\Administrative Templates\System\Internet Communication Management\Internet Communication Settings

The setting is called "Turn off Automatic Root Certificates Update".
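As far as I understand it, this policy is stored as the DWORD value DisableRootAutoUpdate under HKLM\SOFTWARE\Policies\Microsoft\SystemCertificates\AuthRoot, where 1 means automatic updates are turned off. The sketch below checks a server without opening the GPO editor. Treat the key and value names as something to verify on your own build. The helper function names are my own.

```python
import sys
from typing import Optional

# Assumed registry location of the "Turn off Automatic Root Certificates Update" policy.
POLICY_KEY = r"SOFTWARE\Policies\Microsoft\SystemCertificates\AuthRoot"
POLICY_VALUE = "DisableRootAutoUpdate"


def auto_root_update_enabled(policy_value: Optional[int]) -> bool:
    """Missing (policy not configured) or 0 leaves automatic updates on; 1 turns them off."""
    return policy_value != 1


def read_policy_value() -> Optional[int]:
    """Read the policy value from HKLM (Windows only); None if it is not set."""
    import winreg  # only available on Windows, so imported lazily
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, POLICY_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, POLICY_VALUE)
            return int(value)
    except FileNotFoundError:
        return None


if __name__ == "__main__":
    if sys.platform == "win32":
        enabled = auto_root_update_enabled(read_policy_value())
        print("Automatic root certificate update enabled:", enabled)
    else:
        print("This check only runs on Windows.")
```

On the affected XenApp servers you would expect this to report False until the GPO is changed.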

I ran the Group Policy Results Wizard for a standard user account to track down the GPO that was applying this setting, and found the setting enabled.

Once I had disabled the setting and run gpupdate /force on the servers, I opened the computer Certificates MMC, opened the Trusted Root Certification Authorities store, noted down the number of certificates and browsed to the previously problematic sites. This time they loaded fine, and I noticed that an extra certificate had been added to the Trusted Root Certification Authorities store.

Problem Solved.

Monday, 27 February 2012

HP Blade BL465 Won't Power On using iLO

I found myself doing an out-of-hours reboot of a Windows Server 2008 R2 server that would not shut down from within the OS. The server is an HP Blade BL465c G7 in a c7000 enclosure, so I performed a cold boot via the HP iLO menu as I normally would. I then started to worry when I saw the orange indicator light on the blade enclosure rather than the normal green powered-on light, with no errors in the IML log.

I tried the power options in the Remote Console, the Integrated Remote Console and the Onboard Administrator, clicking "Momentary Press", which would normally power on the server. None of them worked; the light was still orange.

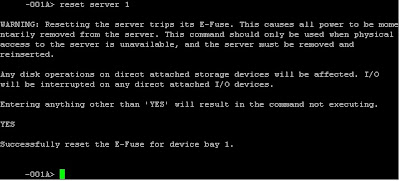

I then did some research and found that if you SSH onto the Onboard Administrator, you can run a reset command, which effectively removes and reseats the blade. You can do this using PuTTY or any other SSH client.

Once you have connected to the Onboard Administrator, log in with the same username and password you use in the GUI. Make sure you identify the server correctly by the bay number that appears to the left of the server name in the device bay list, then type:

reset server X (where X is the bay number).

Read the warning, then type YES and press Enter.

The blade disappears from the chassis, just as it would if you physically removed it, and after about 20-30 seconds it reappears, already powered up.
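Resetting the wrong bay would take down a healthy server, so it can be worth building the command from the bay number you checked rather than typing it from memory. Below is a small hypothetical helper for that. It assumes the 16 half-height device bays of a c7000. It also assumes the confirmation rule that the warning describes, where anything other than YES cancels the command. Neither the function names nor the bay limit come from HP tooling.

```python
# Assumption: a c7000 enclosure has 16 half-height device bays.
C7000_DEVICE_BAYS = 16


def reset_server_command(bay: int, bays: int = C7000_DEVICE_BAYS) -> str:
    """Build the Onboard Administrator CLI line, refusing out-of-range bays."""
    if not isinstance(bay, int) or not 1 <= bay <= bays:
        raise ValueError(f"bay must be between 1 and {bays}, got {bay!r}")
    return f"reset server {bay}"


def confirmed(answer: str) -> bool:
    """Assumed confirmation rule: only an exact YES lets the reset proceed."""
    return answer.strip() == "YES"


if __name__ == "__main__":
    # Paste the printed line into the PuTTY session on the Onboard Administrator.
    print(reset_server_command(3))
```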

Saved me a night-time drive into work :)
